In the dynamic landscape of modern occupations, staying current with the latest tools and technologies is essential for professionals who want to excel in their fields. Knowing which tools an occupation relies on improves efficiency and helps people adapt and innovate as their work environment evolves. In this context, the question arises: “What tools and technology are used in this occupation?”

This inquiry serves as a gateway to unraveling the intricacies of diverse professions, shedding light on the instrumental elements that contribute to success in the chosen field. Let’s delve into the specifics and explore the tools and technology that play a pivotal role in shaping the landscape of various occupations.

Natural Language Processing (NLP)

Natural Language Processing (NLP) is a subfield of artificial intelligence that focuses on the interaction between computers and human language. The primary goal of NLP is to enable machines to understand, interpret, and generate human language, whether as text or speech. This interdisciplinary field draws on linguistics, computer science, and cognitive psychology to develop algorithms and models that process natural language data.

Key Components of Natural Language Processing:

| Component | Description | Importance |
| --- | --- | --- |
| Tokenization | Breaks text into smaller units (tokens). | Enables analysis of individual elements in a text. |
| Part-of-Speech Tagging | Assigns grammatical categories to words. | Aids in understanding syntactic structure. |
| Named Entity Recognition | Identifies and classifies entities in text. | Extracts structured information and context. |
| Syntax and Parsing | Analyzes grammatical structure and word relationships. | Helps understand hierarchical structure in sentences. |
| Semantic Analysis | Extracts meaning by understanding word relationships. | Facilitates comprehension of context and intent. |
| Sentiment Analysis | Determines sentiment in text (positive, negative). | Useful for gauging public opinion and feedback. |
| Machine Translation | Translates text between languages. | Facilitates cross-language communication. |
| Information Retrieval | Finds and presents relevant information. | Essential for search engines and question-answering. |
| Speech Recognition | Converts spoken language into written text. | Enables hands-free operation and transcription services. |
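To make the first and sixth rows of the table concrete, here is a minimal sketch of tokenization followed by lexicon-based sentiment scoring. The tiny `SENTIMENT_LEXICON` and its weights are hypothetical illustrations; production systems typically learn sentiment from labeled data rather than a hand-written word list.

```python
import re

# Hypothetical toy lexicon: word -> sentiment weight (illustration only).
SENTIMENT_LEXICON = {"good": 1, "great": 2, "love": 2, "bad": -1, "terrible": -2, "hate": -2}


def tokenize(text: str) -> list[str]:
    """Split text into lowercase word tokens; each punctuation mark becomes its own token."""
    return re.findall(r"[a-z0-9']+|[^\sa-z0-9']", text.lower())


def sentiment(text: str) -> str:
    """Sum the lexicon weights of all tokens and map the total to a label."""
    score = sum(SENTIMENT_LEXICON.get(token, 0) for token in tokenize(text))
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"
```

For example, `tokenize("I love this phone, but the battery is bad!")` yields word tokens plus separate `","` and `"!"` tokens, and `sentiment` on the same sentence returns `"positive"` because "love" (+2) outweighs "bad" (-1).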

Cloud Computing Platforms

Cloud computing platforms have transformed the way businesses and individuals access, manage, and deploy computing resources. These platforms offer scalable, flexible infrastructure that lets users draw on computing power, storage, and other services without owning physical hardware.

Key Features and Services

Cloud computing platforms offer a broad range of services, typically grouped into Infrastructure as a Service (IaaS), Platform as a Service (PaaS), and Software as a Service (SaaS). Users can scale resources up or down with demand, reduce costs through pay-as-you-go pricing that bills only for the resources actually consumed, and take advantage of the wide range of tools and applications each platform provides.
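The cost advantage of pay-as-you-go pricing comes down to simple arithmetic. The sketch below compares paying only for the instance-hours actually used with provisioning a fixed fleet sized for peak demand. The hourly demand figures and the $0.50 rate are made-up illustrative numbers, not any provider's actual pricing.

```python
def pay_as_you_go_cost(hourly_demand: list[int], rate: float) -> float:
    """Bill only the instance-hours actually used."""
    return sum(hourly_demand) * rate


def fixed_capacity_cost(hourly_demand: list[int], rate: float) -> float:
    """Provision for the peak hour and keep that many instances running every hour."""
    return max(hourly_demand) * len(hourly_demand) * rate


# Illustrative demand: instances needed in each of six hours (made-up numbers).
demand = [2, 2, 2, 8, 8, 2]
RATE = 0.50  # hypothetical price per instance-hour, in dollars

print(pay_as_you_go_cost(demand, RATE))   # 24 instance-hours x 0.50 -> 12.0
print(fixed_capacity_cost(demand, RATE))  # 8 instances x 6 hours x 0.50 -> 24.0
```

The gap grows with how "spiky" demand is: when usage is flat, the two models cost roughly the same.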

Leading Cloud Providers

The market for cloud computing services is dominated by major providers such as Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP). Each of these platforms has its strengths, catering to diverse user needs and industries. As businesses increasingly migrate to the cloud, choosing the right platform becomes crucial for optimizing performance, security, and overall operational efficiency.

Machine Learning and Deep Learning Frameworks

Machine learning (ML) and deep learning frameworks are essential tools for developing intelligent applications. They provide the infrastructure for building, training, and deploying models. Here, I’ll provide an overview of some popular machine learning and deep learning frameworks, along with key details about each:

| Framework Type | Framework | Key Points |
| --- | --- | --- |
| Machine Learning | TensorFlow | - Developed by Google Brain<br>- Supports both deep learning and traditional machine learning<br>- Widely used in research and industry |
| Machine Learning | Scikit-learn | - Simple and easy to use<br>- Focuses on traditional machine learning algorithms<br>- Excellent documentation and community support |
| Machine Learning | PyTorch | - Originally developed by Facebook’s (now Meta’s) AI Research lab (FAIR)<br>- Dynamic computational graph allows for more flexibility<br>- Widely used in research and academia |
| Deep Learning | TensorFlow | - Widely used for deep learning tasks<br>- Provides high-level APIs like Keras for ease of use<br>- Strong community support and extensive resources |
| Deep Learning | PyTorch | - Dynamic computational graph for flexibility<br>- Popular in research and academia<br>- Easier to understand and debug than TensorFlow for some users |
| Deep Learning | Keras | - High-level neural networks API<br>- Keras 3 runs on top of TensorFlow, JAX, or PyTorch (earlier versions also supported Theano)<br>- Designed for quick experimentation and prototyping |
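At their core, these frameworks automate a training loop: compute predictions, measure the error, compute gradients, and update parameters. The minimal sketch below writes that loop by hand in plain Python for a one-variable linear model trained with batch gradient descent on mean squared error. It illustrates what TensorFlow or PyTorch automate (gradients via automatic differentiation, updates via an optimizer); it is not how either framework is implemented, and the learning rate and epoch count are arbitrary choices for this toy data.

```python
def train_linear_regression(xs: list[float], ys: list[float],
                            lr: float = 0.05, epochs: int = 2000) -> tuple[float, float]:
    """Fit y ~ w*x + b by batch gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Forward pass: residuals between predictions and targets.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradients of MSE = (1/n) * sum(error^2) with respect to w and b.
        grad_w = (2.0 / n) * sum(e * x for e, x in zip(errors, xs))
        grad_b = (2.0 / n) * sum(errors)
        # Optimizer step: move the parameters against the gradient.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b
```

On points sampled from `y = 2x + 1`, for instance `xs = [0, 1, 2, 3, 4]` and `ys = [1, 3, 5, 7, 9]`, the returned `(w, b)` approaches `(2, 1)`. In a framework, the two hand-derived gradient lines are replaced by a call that differentiates the loss automatically.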

Programming Languages

Programming languages are the tools developers use to instruct computers to perform specific tasks. There are numerous programming languages, each with its own syntax, semantics, and use cases. Here’s a brief overview of some popular ones:

| Programming Language | Primary Use |
| --- | --- |
| Python | General-purpose programming, scripting, automation |
| Java | Enterprise applications, Android development |
| C++ | System-level programming, game development |
| JavaScript | Web development, client-side scripting |
| Ruby | Web development, automation, scripting |
| Swift | iOS and macOS app development |
| C# | Windows application development, game development |
| Go | Systems programming, cloud computing |
| PHP | Server-side web development |
| Rust | Systems programming, performance-critical tasks |

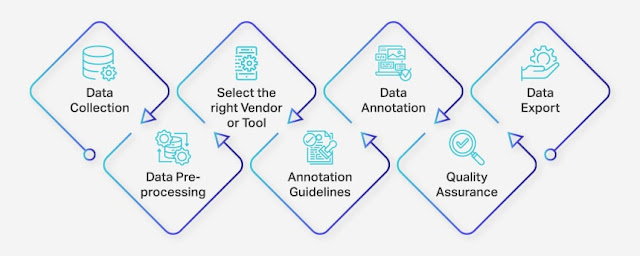

Data Annotation and Preprocessing Tools

Data annotation and preprocessing are crucial steps in the machine learning pipeline and play a central role in the quality and effectiveness of models. Annotation involves labeling raw data so that machine learning algorithms have the context they need to learn from (for example, the correct class of an image or the boundaries of an object), while preprocessing focuses on cleaning, transforming, and organizing data to make it suitable for model training. Specialized tools have been developed to streamline both processes.

Data Annotation Tools

Labelbox

Labelbox is a versatile data annotation platform that supports annotation types such as image classification, object detection, and segmentation. It provides a user-friendly interface for annotators and offers collaboration features to streamline teamwork. Labelbox also integrates with popular machine learning frameworks, so labeled data can flow directly into the model development workflow.

VGG Image Annotator (VIA)

VIA is an open-source annotation tool that lets users annotate images for object detection, segmentation, and classification tasks. It supports multiple annotation formats and can be customized to suit specific project requirements. VIA is particularly well suited to research projects and small-scale annotation tasks.

Prodigy

Prodigy is an annotation tool that combines manual annotation with active learning. It allows users to create custom annotation workflows and uses active learning to select the examples whose labels are most likely to improve the model, reducing the amount of annotation required. Prodigy supports various annotation types and is suitable for both small and large-scale projects.
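One common active learning strategy is uncertainty sampling: ask annotators to label the examples the current model is least sure about. The sketch below assumes a binary classifier whose predicted positive-class probabilities are already available in a dict keyed by hypothetical example IDs. It shows the general idea only; it is not Prodigy's API or its internal selection logic.

```python
def select_for_annotation(predictions: dict[str, float], k: int) -> list[str]:
    """Return the k example IDs whose positive-class probability is closest to 0.5.

    A probability near 0.5 means the model is uncertain, so a human label on that
    example is expected to be more informative than one on a confident prediction.
    """
    return sorted(predictions, key=lambda ex_id: abs(predictions[ex_id] - 0.5))[:k]
```

With predictions `{"a": 0.95, "b": 0.52, "c": 0.10, "d": 0.45, "e": 0.70}` and `k=2`, the function picks `"b"` and `"d"`, the two examples nearest the decision boundary. After these are labeled, the model is retrained and the selection repeats.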

Data Preprocessing Tools

Pandas

Pandas is a widely used Python library for data manipulation and analysis. It provides data structures like the DataFrame, which make it easy to clean, transform, and organize tabular data. With its extensive functionality, Pandas is an essential tool for preprocessing tasks such as handling missing values, filtering data, and building feature engineering pipelines.
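Two of the preprocessing tasks just mentioned, filling missing values and filtering rows, can be sketched in plain Python on a list of dicts. In pandas the same steps would typically be written as `df["age"].fillna(df["age"].mean())` and `df[df["age"] >= 30]`; the dependency-free version below only illustrates the logic, and the `age`/`city` columns are hypothetical.

```python
from statistics import mean


def impute_mean(rows: list[dict], column: str) -> list[dict]:
    """Return copies of rows with missing (None) values in `column` replaced by the column mean."""
    observed = [row[column] for row in rows if row[column] is not None]
    fill_value = mean(observed)  # raises StatisticsError if every value is missing
    return [
        {**row, column: fill_value if row[column] is None else row[column]}
        for row in rows
    ]


def filter_rows(rows: list[dict], column: str, min_value: float) -> list[dict]:
    """Keep only rows whose `column` value is at least `min_value`."""
    return [row for row in rows if row[column] >= min_value]


# Hypothetical example data with one missing age.
people = [
    {"age": 20, "city": "Oslo"},
    {"age": None, "city": "Lima"},
    {"age": 40, "city": "Pune"},
]
cleaned = impute_mean(people, "age")     # the missing age becomes the mean of 20 and 40
adults_30_plus = filter_rows(cleaned, "age", 30)
```

Mean imputation is only one option; depending on the data, the median, a constant, or dropping incomplete rows may be more appropriate.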

Scikit-learn

Scikit-learn is a machine learning library that includes modules for data preprocessing. It offers tools for scaling features, encoding categorical variables, and handling imbalanced datasets. Scikit-learn works directly with NumPy arrays and pandas DataFrames, and its preprocessing steps can be chained with models in a single pipeline, which makes it a popular choice for preprocessing in the machine learning community.
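In scikit-learn, feature scaling and categorical encoding are usually done with `StandardScaler` and `OneHotEncoder` from `sklearn.preprocessing`. The plain-Python sketch below reproduces the underlying arithmetic for a single column so the transformations are easy to see: standardization subtracts the mean and divides by the population standard deviation, and one-hot encoding maps each category to its own 0/1 column (categories sorted, as the encoder does by default). It is an illustration, not a replacement for the library classes.

```python
from statistics import fmean, pstdev


def standard_scale(values: list[float]) -> list[float]:
    """Rescale values to zero mean and unit (population) standard deviation."""
    mu = fmean(values)
    sigma = pstdev(values)
    if sigma == 0:
        # A constant column has no spread; centering alone leaves all zeros.
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


def one_hot(values: list[str]) -> tuple[list[str], list[list[int]]]:
    """Return the sorted category list and one 0/1 indicator row per input value."""
    categories = sorted(set(values))
    index = {category: i for i, category in enumerate(categories)}
    rows = []
    for value in values:
        row = [0] * len(categories)
        row[index[value]] = 1
        rows.append(row)
    return categories, rows
```

For example, `one_hot(["red", "green", "red"])` returns the categories `["green", "red"]` with rows `[[0, 1], [1, 0], [0, 1]]`.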

TensorFlow Data Validation (TFDV)

TFDV is a component of the TensorFlow Extended (TFX) ecosystem that focuses on data validation for machine learning pipelines. It helps identify issues in training and serving datasets, ensuring consistency and quality. TFDV is particularly useful for detecting schema drift, missing values, and data anomalies during preprocessing.
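Schema-based validation of the kind TFDV performs can be illustrated with a small sketch: declare what each feature should look like, then report every row that violates the declaration. The dict-based `SCHEMA` format and the `validate` function below are hypothetical and written for illustration only. TFDV's real schemas are protocol buffers, typically inferred from dataset statistics (for example with `tfdv.infer_schema`), and its API differs.

```python
# Hypothetical schema format: expected type, whether the feature is required,
# and optional numeric range or allowed set of values.
SCHEMA = {
    "age": {"type": int, "required": True, "min": 0, "max": 120},
    "country": {"type": str, "required": True, "domain": {"IN", "NO", "PE"}},
}


def validate(rows: list[dict], schema: dict) -> list[str]:
    """Return a human-readable anomaly message for every schema violation."""
    anomalies = []
    for i, row in enumerate(rows):
        for name, spec in schema.items():
            value = row.get(name)
            if value is None:
                if spec.get("required", False):
                    anomalies.append(f"row {i}: missing required feature '{name}'")
                continue
            if not isinstance(value, spec["type"]):
                anomalies.append(
                    f"row {i}: '{name}' expected {spec['type'].__name__}, got {type(value).__name__}"
                )
                continue
            low, high = spec.get("min"), spec.get("max")
            if (low is not None and value < low) or (high is not None and value > high):
                anomalies.append(f"row {i}: '{name}'={value!r} out of range [{low}, {high}]")
            if "domain" in spec and value not in spec["domain"]:
                anomalies.append(f"row {i}: '{name}'={value!r} not in domain")
    return anomalies
```

Running `validate` on serving data with the schema derived from training data is a simple way to catch drift, such as a new country code appearing in production.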

APIs for Model Integration

APIs (application programming interfaces) play a crucial role in model integration by enabling communication between different software components, systems, or services. When it comes to integrating models into applications, several types of APIs are important. Here are some key APIs used for model integration:

RESTful APIs (Representational State Transfer)

Definition: RESTful APIs are web APIs that follow the principles of the REST architectural style. They use standard HTTP methods (GET, POST, PUT, DELETE) to act on resources identified by URLs and typically exchange data in JSON or XML format.

Importance: RESTful APIs are widely used for model integration because of their simplicity, scalability, and platform independence. They suit a wide variety of applications and are easy to integrate into web services.
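The mapping between HTTP methods and operations on resources is the heart of REST. The sketch below models it as a plain function that takes a method, a path, and a JSON body and returns a status code and JSON response, storing hypothetical model metadata in an in-memory dict. It deliberately leaves out the network layer and is an illustration of REST conventions, not a production router.

```python
import json

# Hypothetical in-memory "database" of model metadata, keyed by model name.
MODELS: dict[str, dict] = {}


def _error(message: str) -> str:
    return json.dumps({"error": message})


def handle(method: str, path: str, body: str = "") -> tuple[int, str]:
    """Route a request on /models or /models/<name>; return (status code, JSON body)."""
    parts = path.strip("/").split("/")
    if parts[0] != "models" or len(parts) > 2:
        return 404, _error("not found")

    if len(parts) == 1:  # the collection resource: /models
        if method == "GET":  # list model names
            return 200, json.dumps(sorted(MODELS))
        if method == "POST":  # create a new record inside the collection
            record = json.loads(body)
            if record["name"] in MODELS:
                return 409, _error("model already exists")
            MODELS[record["name"]] = record
            return 201, json.dumps(record)
        return 405, _error("method not allowed")

    name = parts[1]  # a single resource: /models/<name>
    if method == "GET":  # read
        if name not in MODELS:
            return 404, _error("not found")
        return 200, json.dumps(MODELS[name])
    if method == "PUT":  # create or fully replace
        existed = name in MODELS
        MODELS[name] = {**json.loads(body), "name": name}
        return (200 if existed else 201), json.dumps(MODELS[name])
    if method == "DELETE":  # remove
        if MODELS.pop(name, None) is None:
            return 404, _error("not found")
        return 204, ""
    return 405, _error("method not allowed")
```

A typical sequence is `POST /models` to register a model, `GET /models/<name>` to read it, `PUT` to replace it with a new version, and `DELETE` to remove it, each signalled by a conventional status code (201, 200, 204, 404, 405, 409).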

GraphQL APIs

Definition: GraphQL is a query language for APIs and a runtime for executing those queries, which lets clients request exactly the data they need. It provides a more efficient and flexible alternative to traditional REST APIs.

Importance: GraphQL is valuable for model integration when fine-grained control over data retrieval is required. Clients specify the exact shape of the response, which reduces over-fetching and under-fetching of data.
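The "ask only for what you need" idea can be shown with a toy field selector: the client sends a nested selection of fields and receives a response with exactly that shape. This sketch is not a GraphQL implementation (real servers parse the query language, validate it against a schema, and call resolvers); the `PREDICTION` record and its fields are hypothetical.

```python
# Hypothetical full record held by a model-serving backend.
PREDICTION = {
    "id": "p-17",
    "label": "spam",
    "score": 0.97,
    "model": {"name": "clf", "version": 3, "owner": "ml-team"},
}


def select(data: dict, selection: dict) -> dict:
    """Return only the requested fields.

    `selection` maps a field name to None (return the value as-is) or to a
    nested selection applied to that field's dict value.
    """
    result = {}
    for field, sub_selection in selection.items():
        value = data[field]
        result[field] = value if sub_selection is None else select(value, sub_selection)
    return result


# Roughly the GraphQL query:  { prediction { label model { version } } }
response = select(PREDICTION, {"label": None, "model": {"version": None}})
```

The response carries only `label` and the model's `version`, leaving out `id`, `score`, and the other model fields, which is the over-fetching reduction described above.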

gRPC (gRPC Remote Procedure Calls)

Definition: gRPC is a high-performance RPC (Remote Procedure Call) framework developed by Google. It uses HTTP/2 as the transport protocol and Protocol Buffers as the interface definition language.

Importance: gRPC is efficient and well suited to real-time applications where low latency and high performance are critical. It is commonly used in microservices architectures to handle communication between services.
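Part of gRPC's efficiency comes from Protocol Buffers' compact binary encoding. Integers are written as base-128 "varints": seven bits of payload per byte, with the high bit set when more bytes follow, so small numbers take a single byte. The sketch below encodes and decodes non-negative varints and a complete varint field, whose key is `(field_number << 3) | wire_type` with wire type 0. It covers only this one piece of the wire format, and negative numbers are rejected rather than encoded as Protocol Buffers does.

```python
def encode_varint(n: int) -> bytes:
    """Encode a non-negative integer as a Protocol Buffers base-128 varint."""
    if n < 0:
        raise ValueError("this sketch only handles non-negative integers")
    out = bytearray()
    while True:
        low_bits = n & 0x7F  # take the lowest 7 bits
        n >>= 7
        if n:
            out.append(low_bits | 0x80)  # continuation bit: more bytes follow
        else:
            out.append(low_bits)  # last byte: continuation bit clear
            return bytes(out)


def decode_varint(data: bytes) -> int:
    """Decode a varint that starts at the beginning of `data`."""
    result = 0
    for position, byte in enumerate(data):
        result |= (byte & 0x7F) << (7 * position)
        if not (byte & 0x80):
            return result
    raise ValueError("truncated varint")


def encode_varint_field(field_number: int, value: int) -> bytes:
    """Encode a complete varint field: key (field number + wire type 0), then the value."""
    return encode_varint((field_number << 3) | 0) + encode_varint(value)
```

For example, `encode_varint(300)` produces the two bytes `0xAC 0x02`, and field 1 holding the value 150 encodes to the three bytes `0x08 0x96 0x01`, far smaller than the equivalent JSON text.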

WebSocket APIs

Definition: WebSocket is a communication protocol that provides full-duplex communication channels over a single, long-lived connection. It is often used for real-time applications.

Importance: WebSocket APIs are important for integrating models into real-time applications that need continuous updates or bidirectional communication, such as chat applications, live streaming, and collaborative editing.
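A WebSocket connection starts as an ordinary HTTP request that asks to "upgrade" the connection. Per RFC 6455, the server proves it understood the request by returning a `Sec-WebSocket-Accept` header: the client's `Sec-WebSocket-Key` concatenated with a fixed GUID, hashed with SHA-1, and base64-encoded. The sketch below computes only that value; a full server must also handle framing, masking, and the rest of the protocol, which libraries normally take care of.

```python
import base64
import hashlib

# Fixed GUID defined by RFC 6455 for the opening handshake.
WEBSOCKET_GUID = "258EAFA5-E914-47DA-95CA-C5AB0DC85B11"


def websocket_accept(sec_websocket_key: str) -> str:
    """Compute the Sec-WebSocket-Accept value for a client's Sec-WebSocket-Key."""
    digest = hashlib.sha1((sec_websocket_key + WEBSOCKET_GUID).encode("ascii")).digest()
    return base64.b64encode(digest).decode("ascii")
```

With the sample key from RFC 6455, `dGhlIHNhbXBsZSBub25jZQ==`, the function returns `s3pPLMBiTxaQ9kYGzzhZRbK+xOo=`. After the server sends this in a `101 Switching Protocols` response, both sides can push messages at any time over the same connection.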

Python APIs (e.g., Flask, FastAPI)

Definition: Python APIs, built using frameworks like Flask or FastAPI, provide a way to expose model functionality through HTTP endpoints.

Importance: Python APIs are commonly used to serve machine learning models trained with popular frameworks like TensorFlow or PyTorch. They offer a straightforward way to expose models as web services, making it easy for other applications to consume their predictions.
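Flask and FastAPI are third-party packages, so to keep the example dependency-free, the sketch below exposes a model through a `POST /predict` HTTP endpoint using only the standard library's `http.server`. The `predict` function and its weights are a hypothetical stand-in for a real trained model's prediction call. Python's documentation advises against using `http.server` in production, so treat this as an illustration of the request/response flow rather than a deployment recipe.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def predict(features: list[float]) -> float:
    """Hypothetical stand-in model: a fixed linear score over three features."""
    weights = [0.4, -0.2, 0.1]
    return sum(w * x for w, x in zip(weights, features))


class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/predict":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        try:
            payload = json.loads(self.rfile.read(length))
            score = predict(payload["features"])
        except (ValueError, KeyError, TypeError):
            # Malformed JSON, missing key, or wrong value types.
            self.send_error(400, "expected a JSON body with a 'features' list")
            return
        body = json.dumps({"prediction": score}).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # silence the default per-request logging


def serve(host: str = "127.0.0.1", port: int = 8000) -> None:
    """Run the prediction service until interrupted."""
    HTTPServer((host, port), PredictHandler).serve_forever()
```

Calling `serve()` starts the service; a client then sends `{"features": [1.0, 2.0, 3.0]}` to `http://127.0.0.1:8000/predict` and receives a JSON object with a `prediction` field. Flask and FastAPI wrap the same flow in route decorators and add request validation.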

Custom APIs

Definition: In some cases, organizations develop custom APIs tailored to their specific needs, protocols, or data formats.

Importance: Custom APIs offer flexibility and can be designed to meet specific requirements. They are particularly useful for unique integration scenarios or specialized models.

Collaboration and Version Control

Collaboration and version control are essential components of modern software development, enabling teams to work efficiently on shared projects. Collaboration involves the coordinated effort of multiple people, fostering communication, idea sharing, and collective problem-solving. Version control systems, on the other hand, record changes to a project’s codebase over time, allowing developers to track, revert, and merge changes.

Because every change is recorded, team members can always retrieve the latest version of the code, and the shared history provides a structured framework for collaboration. Popular version control tools such as Git have become integral to the development process, allowing developers to work concurrently on different parts of a project (for example, on separate branches) while maintaining a unified, organized codebase. Together, effective collaboration and version control improve productivity, reduce errors, and support a smooth development workflow.
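One reason Git can track and merge changes reliably is that it identifies every stored file version by a hash of its content. For a file's contents (a "blob"), Git hashes a short header, `blob <size>` followed by a NUL byte, plus the raw bytes, so identical content always gets the same ID and any change produces a new one. The sketch below reproduces this for repositories using Git's default SHA-1 object format; repositories created with the newer SHA-256 object format compute different IDs.

```python
import hashlib


def git_blob_id(content: bytes) -> str:
    """Compute the object ID Git assigns to file contents (SHA-1 object format)."""
    header = f"blob {len(content)}\0".encode("ascii")
    return hashlib.sha1(header + content).hexdigest()
```

For an empty file, this returns `e69de29bb2d1d6434b8b29ae775ad8c2e48c5391`, the same ID that `git hash-object` reports for empty content.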

Conclusion

Professionals in this occupation rely on a diverse set of tools and technologies, from NLP techniques and cloud platforms to machine learning frameworks, programming languages, data annotation and preprocessing tools, APIs, and version control systems. The specific tools vary with the nature of the work, but together they enable professionals to streamline processes, analyze data, and stay at the forefront of their fields, contributing to efficiency and innovation across the occupation.

Frequently Asked Questions

Q: Are there any standard technologies professionals in this occupation should be familiar with?

Ans: Yes, staying updated with industry-standard technologies is crucial. Professionals in this occupation often need proficiency in tools like CAD software, project management tools, data analysis tools, or programming languages, depending on the nature of their work.

Q: How important is proficiency in software applications for this occupation?

Ans: Proficiency in relevant software applications is often integral to success in this occupation. Professionals may use software for tasks such as data analysis, design, simulation, or project management. Keeping skills up-to-date with the latest software advancements is highly recommended.

Q: Do professionals in this occupation need to be familiar with emerging technologies?

Ans: Yes, staying abreast of emerging technologies is essential. The rapid evolution of technology often introduces new tools and methods. Professionals should be proactive in learning and adapting to these advancements to remain competitive and enhance their capabilities.

Q: Are there any specialized technologies unique to certain niches within this occupation?

Ans: Yes, certain niches within this occupation may have specialized tools or technologies tailored to their specific needs. For example, professionals in fields like artificial intelligence, robotics, or biotechnology may use highly specialized tools relevant to their area of expertise.

Q: How can professionals in this occupation stay updated on the latest tools and technologies?

Ans: To stay updated, professionals can attend conferences, workshops, and webinars, participate in online forums, and engage in continuous learning through courses and certifications. Following industry publications and networking with peers can also provide valuable insights into emerging tools and technologies.